Chapter 6: Prompt Engineering and RAG

Chapter Overview

In Chapters 1-5, we explored how Generative AI has begun reshaping the practice of law. We examined how it functions at a high level, surveyed popular models and specialized “legal AI” tools, and discussed the many ways in which it can assist lawyers, beyond just cranking out finished briefs or memos.

Now we turn to one of the most practical facets of working with AI: prompt engineering, the art and science of writing effective instructions that guide AI models to produce useful, relevant, and accurate results. Mastering the fundamentals of prompt engineering can significantly elevate the quality of AI-generated work product, especially in the legal domain, where precision and factual correctness are paramount. We will also explore Retrieval-Augmented Generation (RAG), a method of grounding AI responses in external data sources such as case law, statutes, and client documents. RAG is widely considered a key technique for enhancing AI’s accuracy and reducing “hallucinations” (instances where an AI confidently makes up facts or citations).

By the end of this chapter, you should understand:

- Naive vs. Informed Prompts – why “garbage in, garbage out” applies to AI queries, and how structured, context-rich instructions lead to better AI responses.

- AI as Oracle vs. AI as Helpful Assistant – the importance of adopting a collaborative perspective on AI tools.

- Prompt Engineering Frameworks – including RTF (Role-Task-Format), RISEN (Role-Instructions-Steps-End Goal-Narrowing), and CRAFT (Context-Role-Action-Format-Target Audience), each of which helps ensure clarity and consistency in your prompts.

- Retrieval-Augmented Generation (RAG) – how it works, why it’s essential for legal research and document automation, and how naive RAG differs from advanced or “enhanced” RAG.

- Future Trends and Best Practices – advanced topics like meta-prompting (using AI to improve AI prompts), whether prompt engineering is “dead” or alive and well, and how knowledge graph techniques might push reliability even further.

Why Prompt Engineering Matters

Imagine you’re a brand-new associate at a busy law firm. The partner sends you an email that simply says, “Draft a memorandum on the new regulations.” No further details. You’d likely struggle, because you have little sense of which regulations, how long the memo should be, what tone to adopt, or what questions you need to answer. AI models face a similar challenge when given incomplete or vague instructions. They will produce some output, but it might be ill-suited to your precise needs.

Prompt engineering is the antidote. By framing your query with context, specifics, and clarity, you drastically improve the likelihood of a high-quality AI response. In other words, the prompt is how you “communicate” with the AI, just as you would carefully brief a human colleague on an assignment.

Naive vs. Informed Prompts

- Naive Prompt: A short, vague, or generic instruction, such as “Draft a lease” or “What is the law on negligence?” The AI can generate a response, but it may be too broad, incomplete, or even factually incorrect.

- Informed Prompt: A context-rich, detailed instruction specifying jurisdiction, style, length, format, or any other critical parameters. For example, “Draft a one-year residential lease for a rental property in California, including a clause restricting pets, at a monthly rent of $2,000, in a clear and concise style.”

The difference between naive and informed prompts can be dramatic. An informed prompt puts the AI model in the right mindset or perspective to deliver exactly what you need. It minimizes guesswork, reduces the chance of irrelevant or erroneous material, and typically yields more precise results.

Call Out: Key Term – "Hallucination"

Definition: In AI contexts, a hallucination is an AI-generated statement that confidently asserts incorrect or fabricated information. For lawyers, this can be dangerous, for example when a brief cites non-existent cases. Good prompt design and techniques like RAG help reduce hallucinations.

Rethinking Our Mindset: AI as Oracle vs. AI as Helpful Assistant

One of the biggest mindset shifts when working with AI is moving away from the notion of the “all-knowing oracle.” If you ask an AI a question, it may respond with supreme confidence, but that does not mean the content is correct.

- Oracle Mindset: The user assumes the AI never errs. This often leads to minimal prompting (“just produce the answer”), blind trust in AI outputs, and potential disasters if the AI “hallucinates.”

- Helpful Assistant Mindset: The user sees AI as a bright research associate or paralegal, capable and resourceful, but needing direction, supervision, and verification.

Adopting the assistant mindset means you carefully craft your prompts, double-check unusual results, and ask follow-up questions. It reduces the risk of inadvertently filing briefs with made-up citations, as happened in the notorious Mata v. Avianca case (often called the “ChatGPT citation” or “Schwartz” case), where attorneys were sanctioned after filing a brief that cited cases ChatGPT had invented.

Practice Pointer: Iterative Prompting

After receiving an AI-generated answer, consider prompting it further: “Please list your sources,” or “Are you certain about each case citation?” This may help reveal whether the answer is grounded in real data or is possibly hallucinated. A model can be just as confidently wrong about its own sources, however, so treat its reply as a lead to check, not as a replacement for your professional judgment.

Foundational Concepts in Prompt Engineering

The “Garbage In, Garbage Out” Principle

A large language model (LLM) like GPT or Claude is essentially a “prediction machine” that generates its best guess as to what words should come next. If your input prompt (“garbage in”) is vague or riddled with ambiguities, the output (“garbage out”) will often be irrelevant, low-quality, or even completely incorrect.

By contrast, a high-quality prompt makes the AI more likely to generate a relevant, correct, and well-structured answer. This concept is so important that entire strategies, known as prompt frameworks, have been developed to ensure we consistently feed LLMs the “right” kind of instructions.

Essential Prompt Engineering Principles

Below is a distilled set of principles that leading authors and practitioners emphasize:

- Give Clear Direction

- Tell the AI exactly what you want and the approach to take. For instance, specify the subject matter, tone, or depth required.

- Specify Format

- If you need bullet points, an outline, or a table, say so. LLMs will follow explicit format instructions surprisingly well.

- Provide Examples

- Consider giving short “sample outputs” if you want the AI to mimic a certain style or structure.

- Evaluate Quality

- After the AI responds, scrutinize the answer. Ask follow-up questions or have the AI critique its own response.

- Divide Labor

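- For complex tasks, break them down into smaller steps or multiple prompts. This is often called “prompt chaining” or “multi-step prompting.” (A related technique, “chain-of-thought” prompting, asks the model to reason step by step within a single response.)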

In the legal context, these principles translate to specific best practices, such as asking for citations or clarifications, dividing a large research question into smaller sub-questions, and providing relevant legal context up front (e.g., “Focus on federal law,” “The dispute is about breach of contract, not tort claims,” etc.).

Example and Scenario

Naive Prompt:

Please provide a memo on employment discrimination.

Likely AI Response:

A generic summary of what employment discrimination means, perhaps referencing Title VII, maybe mentioning the EEOC, but lacking detail on jurisdiction or new developments.

Informed Prompt (Using Some Prompt Engineering Principles):

You are a mid-level associate in a U.S. law firm advising a tech startup with 50 employees. Draft a 2-page memorandum explaining the key aspects of Title VII employment discrimination claims (especially gender discrimination) in plain English. Focus on potential liability for employers with remote workers in multiple states. Include a bullet-point list of action items for compliance.

Likely AI Response:

A more structured memorandum discussing Title VII, any relevant nuances about multi-state liability, plus bullet points on practical steps (such as anti-discrimination policies, training, record-keeping). This is far more specific and actionable.

Single-Shot Prompting vs. Few-Shot Prompting

Single-Shot Prompting

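When you give an AI model just one query, without providing any examples, demonstrations, or sample answers beforehand, you are engaging in single-shot prompting. (In the technical literature, prompting with no examples is more often called “zero-shot” prompting, and a prompt containing exactly one example is called “one-shot.”) Essentially, you are relying on the model’s general training and ability to “figure out” your request from a single instruction. For instance: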

Explain the concept of consideration in contract law.

This kind of prompt can still yield useful responses, especially for straightforward questions. However, it places the entire burden on the AI to interpret the task in the way you intended.

Few-Shot Prompting

In few-shot prompting, you include one or more examples within your prompt to show the AI what kind of answer you’re looking for. For instance, you might supply a sample scenario and its associated correct response, then ask for a new response in the same format. It’s like giving the AI a small set of “worked examples” so it can better infer your expectations.

Below is an example of how I want you to explain contract law concepts:

Example:

Question: What is ‘offer’ in contract law?

Answer: An offer is a clear proposal made by one party that, if accepted, creates a binding agreement...

Now, please explain the concept of ‘consideration’ in a similar style.

By doing so, you help the AI understand not just what to talk about but also how to frame or structure the answer.
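A few-shot prompt like the one above can also be assembled programmatically, which is handy when the same worked examples will be reused across many questions. The following is a minimal sketch in Python. The function name `build_few_shot_prompt` and the data layout are illustrative assumptions, not part of any particular AI product.

```python
def build_few_shot_prompt(examples, new_question):
    """Assemble a prompt from (question, answer) example pairs.

    `examples` is a list of (question, answer) tuples showing the desired
    style. With an empty list the result is a plain prompt with no examples.
    """
    lines = []
    if examples:
        lines.append("Below are examples of how I want you to explain contract law concepts.")
        lines.append("")
        for question, answer in examples:
            lines.append(f"Question: {question}")
            lines.append(f"Answer: {answer}")
            lines.append("")
        lines.append("Now, please answer the following question in a similar style.")
    else:
        lines.append("Please answer the following question.")
    lines.append(f"Question: {new_question}")
    lines.append("Answer:")
    return "\n".join(lines)


examples = [
    ("What is 'offer' in contract law?",
     "An offer is a clear proposal made by one party that, if accepted, "
     "creates a binding agreement."),
]
prompt = build_few_shot_prompt(examples, "What is 'consideration' in contract law?")
print(prompt)
```

Adding more tuples to `examples` gives the model more worked examples to imitate. The resulting text is simply pasted or sent to whichever AI tool you use.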

Prompt Engineering Frameworks

Many professionals across tech, education, and law have introduced frameworks to help users create structured, high-quality prompts. Below, we explore three widely cited frameworks: RTF, RISEN, and CRAFT. Using these frameworks is not required, but each aims to instill best practices, reduce ambiguity, and guide you step by step in shaping your AI query. These structured approaches are similar, in a way, to the use of IRAC for organizing legal analysis.

RTF (Role–Task–Format)

This is a concise framework where you identify:

- Role: The role or perspective the AI should adopt (e.g., “You are an experienced real estate attorney”).

- Task: What you want the AI to do (e.g., “Draft a short clause preventing subletting in a residential lease”).

- Format: How you want the output (e.g., “Produce it as a bullet-point list of clauses”).

Practice Pointer:

If you’re short on time, RTF is a quick go-to. A single sentence can incorporate all three elements.

Example (RTF applied):

You are an experienced real estate attorney.

Draft a standard subletting prohibition clause for a residential lease.

Provide the text in a single paragraph, suitable for a Word document.
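For firms that want to standardize prompts, the three RTF elements can be captured in a small helper that refuses to build a prompt when an element is missing. This is only a sketch. The name `build_rtf_prompt` and its parameters are hypothetical and chosen for illustration.

```python
def build_rtf_prompt(role, task, output_format):
    """Combine the Role, Task, and Format elements into one prompt string."""
    parts = {"role": role, "task": task, "format": output_format}
    # Reject empty or whitespace-only elements so no part of RTF is skipped.
    missing = [name for name, value in parts.items() if not value.strip()]
    if missing:
        raise ValueError(f"Missing RTF element(s): {', '.join(missing)}")
    return f"You are {role.strip()}. {task.strip()} {output_format.strip()}"


rtf_prompt = build_rtf_prompt(
    role="an experienced real estate attorney",
    task="Draft a standard subletting prohibition clause for a residential lease.",
    output_format="Provide the text in a single paragraph, suitable for a Word document.",
)
print(rtf_prompt)
```

The validation step is the design point here: a shared helper makes it harder for a rushed user to send a prompt that omits the role or the format.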

RISEN (Role–Instructions–Steps–End Goal–Narrowing)

A slightly more detailed approach:

- Role: Who the AI is or which perspective it should adopt.

- Instructions: The overall context or scenario.

- Steps: The specific steps or method you want the AI to follow.

- End Goal: A clear description of the final deliverable or outcome you expect.

- Narrowing: Any constraints (word limits, jurisdiction, level of detail, etc.).

Why Use RISEN?

If you have a multi-part task, like drafting a research plan, summarizing multiple statutes, or preparing an outline for a complex legal strategy, RISEN helps break it down.

Example (RISEN applied):

Role: You are an expert contract attorney.

Instructions: We need to draft a multi-clause commercial lease for a retail property in New York.

Steps:

1. Outline the key clauses typically included in such leases.

2. Provide a plain-language summary of each clause.

3. Merge them into a cohesive final document.

End Goal: A draft lease that is ready for a senior partner’s review.

Narrowing: Maximum 1,000 words, and focus only on state-level requirements in New York (do not discuss federal law).
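Because RISEN has more moving parts, it lends itself to a reusable template. The sketch below models the five elements as a Python dataclass and renders them in the same labeled layout as the example above. The class name `RisenPrompt` and its `render` method are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RisenPrompt:
    role: str
    instructions: str
    steps: list = field(default_factory=list)
    end_goal: str = ""
    narrowing: str = ""

    def render(self):
        """Return the prompt text with one labeled section per RISEN element."""
        numbered = [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        sections = [
            f"Role: {self.role}",
            f"Instructions: {self.instructions}",
            "Steps:",
            *numbered,
            f"End Goal: {self.end_goal}",
            f"Narrowing: {self.narrowing}",
        ]
        return "\n".join(sections)


lease_prompt = RisenPrompt(
    role="You are an expert contract attorney.",
    instructions="We need to draft a multi-clause commercial lease for a retail property in New York.",
    steps=[
        "Outline the key clauses typically included in such leases.",
        "Provide a plain-language summary of each clause.",
        "Merge them into a cohesive final document.",
    ],
    end_goal="A draft lease that is ready for a senior partner's review.",
    narrowing="Maximum 1,000 words; focus only on New York state-level requirements.",
)
print(lease_prompt.render())
```

Keeping the steps as a list means they are numbered automatically, so adding or reordering a step never leaves the prompt with inconsistent numbering.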

CRAFT (Context–Role–Action–Format–Target Audience)

CRAFT adds an emphasis on the “Target Audience,” ensuring the style, tone, and complexity align with who will read the output.

- Context: Background or situation.

- Role: The AI’s perspective.

- Action: What you want the AI to do.

- Format: The structure or style of the output.

- Target Audience: Who the output is aimed at. This can significantly affect tone and complexity.

Example (CRAFT applied):

Context: A small nonprofit organization is seeking advice on data protection rules.

Role: You are a privacy law expert.

Action: Explain the organization’s obligations under the California Consumer Privacy Act (CCPA).

Format: Provide a concise bullet-point overview with recommendations.

Target Audience: A board of directors with limited legal background.

By adding the target audience, you signal the AI to avoid overly technical language and focus on practical guidance.

Call Out: Why Frameworks Help

Using frameworks systematically can cut down on rework. By ensuring you consistently provide context, specify the AI’s role, define the precise task, and clarify the expected format, you reduce the guesswork and back-and-forth. In a law firm setting, standardizing prompts with a framework can save significant time across multiple attorneys and practice areas.

Advanced Prompting: Meta-Prompting, Iterative Refinement and Bootstrapping

What is Meta-Prompting?

Meta-prompting involves using an AI model to help you craft or refine your own prompts. Essentially, you ask the AI to suggest the best way to instruct itself. For instance:

AI, I want to research the enforceability of non-compete clauses in New York and California for a tech start-up.

How should I prompt you to get the most accurate, step-by-step analysis?

The AI might respond with a recommended structure for the query (e.g., “Please break down your question by jurisdiction, specify the term of the non-compete, mention the type of employees, etc.”). You then take that advice, craft a final prompt, and feed it back into the model. This approach can be especially useful if you’re new to AI or dealing with a novel area of law.

Iterative Prompting

Rarely does a single prompt suffice for complex legal tasks. Instead, consider an iterative approach:

- Draft an initial prompt.

- Review the AI’s output.

- Revise and refine your prompt or ask clarifying questions.

- Repeat until you achieve a satisfactory answer.

This mirrors how you might revise a memo after receiving a first draft from a junior associate. Each iteration homes in on the final deliverable.
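The draft, review, revise, and repeat cycle can be sketched as a simple loop. In the Python sketch below, every name is hypothetical: `ask_model` stands in for whatever AI service you use, `is_acceptable` stands in for your own review of the answer, and `make_follow_up` stands in for the clarifying instruction you would write. A toy model is included so the example runs without any AI service.

```python
def refine_until_acceptable(ask_model, initial_prompt, is_acceptable,
                            make_follow_up, max_rounds=3):
    """Run the draft-review-revise loop for at most `max_rounds` rounds.

    ask_model:      callable(prompt) -> answer text (stand-in for a real AI call)
    is_acceptable:  callable(answer) -> bool (the reviewer's check)
    make_follow_up: callable(prompt, answer) -> revised prompt
    Returns (last_answer, history), where history holds every (prompt, answer).
    """
    prompt = initial_prompt
    history = []
    answer = ""
    for _ in range(max_rounds):
        answer = ask_model(prompt)
        history.append((prompt, answer))
        if is_acceptable(answer):
            break
        prompt = make_follow_up(prompt, answer)
    return answer, history


# Toy stand-in: gives a focused answer only when told the length limit.
def fake_model(prompt):
    if "300 words" in prompt:
        return "Short answer (300 words)."
    return "A long, unfocused answer."


answer, history = refine_until_acceptable(
    ask_model=fake_model,
    initial_prompt="Summarize the dispute.",
    is_acceptable=lambda text: "300 words" in text,
    make_follow_up=lambda prompt, text: prompt + " Limit the summary to 300 words.",
)
print(len(history), "round(s):", answer)
```

The `max_rounds` cap reflects a practical point: if several rounds of clarification have not produced a usable answer, it is usually time to rethink the prompt rather than keep iterating.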

Practice Pointer:

When you receive an incomplete or off-topic AI response, don’t just abandon the process. Instead, clarify the instruction: “That’s not quite it. Please focus on analyzing X case and explain how it contradicts Y statute in 300 words or less.”

Bootstrapping

A powerful emerging practice called bootstrapping takes the idea of iterative prompting a step further by involving multiple AI models, each specializing in a different phase of the creative or problem-solving process. Rather than relying on one model to handle everything from brainstorming to final polish, bootstrapping breaks the task into logical stages, passing the output of each stage to another model that is more directly suited to the next step.

Here’s a simplified example showing how bootstrapping might work in motion practice:

Outline Generation (Model A) e.g., GPT-4o

- Idea: You start with a concept, such as filing a motion to dismiss for lack of personal jurisdiction. Prompt the first AI model—well-suited for planning and organization—to transform this rough idea into a structured, point-by-point outline:

“Take this rough idea for a motion to dismiss and create a detailed outline, including headings for procedural background, legal standard, argument sections, and conclusion.”

- Output: A comprehensive blueprint of the motion, highlighting the main arguments, relevant sub-issues, and potential case citations. This ensures you’ve captured the essential points before any extensive drafting begins.

Drafting the Motion (Model B) e.g., o1

- Implementation: Next, you pass the outline from Model A to a second AI model, specialized in formal legal drafting or text generation:

“Using this outline, draft a formal motion to dismiss for lack of personal jurisdiction, incorporating legal citations where appropriate.”

- Output: A full-length draft motion that follows your established structure, complete with cited authorities (each of which you must verify independently) and procedural elements. Model B handles the heavy lifting of turning an outline into a cohesive legal document.

Argument Testing and Role-Playing (Model C) e.g., Claude 3.5 Sonnet

- Refinement: Finally, hand the drafted motion to a third AI model skilled in debate, role-playing, or generating counter-arguments. Prompt it to simulate opposing counsel or a skeptical judge:

“Adopt the role of opposing counsel and respond to each section of this motion with potential counter-arguments. Then switch perspective to a judge and note possible questions or concerns.”

- Output: A list of rebuttals and pointed queries that help you identify weak spots. You can refine your motion, adjust arguments, or add clarifications before filing. This stage mimics a “moot court” or mock debate session, making your argument more battle-tested.

In each of these steps, the output from one AI model becomes the input to the next. By matching each model’s strengths (outlining, drafting, advocacy) to specific tasks, you limit errors and produce a higher-quality final product. It’s like working with a multi-specialty legal team: one attorney organizes the case strategy, another drafts the documents, and a third tests arguments under adversarial conditions. This structured bootstrapping process gives you a more thorough, well-rounded approach to legal drafting and advocacy.
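The hand-off from one model to the next can be sketched as a small pipeline in which each stage's output is substituted into the next stage's prompt. This is an illustration only. The function `run_pipeline`, the `{previous}` placeholder, and the three toy "models" are all assumptions, and the toy models simply return canned text so the example runs on its own.

```python
def run_pipeline(stages, initial_input):
    """Pass text through each stage in order, keeping every intermediate output.

    `stages` is a list of (name, prompt_template, model) tuples. `model` is
    any callable(prompt) -> text, and each template contains the placeholder
    {previous}, which is filled with the prior stage's output.
    """
    outputs = {}
    current = initial_input
    for name, template, model in stages:
        prompt = template.format(previous=current)
        current = model(prompt)
        outputs[name] = current
    return outputs


# Toy stand-ins for Models A, B, and C (no real AI service is called).
def model_a(prompt):
    return "OUTLINE: background; legal standard; argument; conclusion"

def model_b(prompt):
    return "DRAFT based on -> " + prompt.split("\n", 1)[1]

def model_c(prompt):
    return "COUNTER-ARGUMENTS to -> " + prompt.split("\n", 1)[1]


stages = [
    ("outline", "Create a detailed outline for this idea:\n{previous}", model_a),
    ("draft", "Using this outline, draft a formal motion:\n{previous}", model_b),
    ("critique", "Adopt the role of opposing counsel and respond to this motion:\n{previous}", model_c),
]
outputs = run_pipeline(stages, "Motion to dismiss for lack of personal jurisdiction")
for name, text in outputs.items():
    print(f"{name}: {text}")
```

Keeping every intermediate output, rather than only the final one, lets a supervising attorney review the outline and the draft separately before relying on the critique.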

Is Prompt Engineering Dead?

A common question arises: “Aren’t AI models becoming so advanced that fancy prompts aren’t needed?” Indeed, newer models like GPT-4o or Claude 3.5 Sonnet are better at inferring context from short, casual instructions. Sometimes a simple prompt, such as “Summarize this complaint,” produces an acceptable result.

However, a “one-size-fits-all” approach is risky in legal contexts. Even advanced models can produce incomplete or erroneous summaries if your instructions lack specificity. Moreover, specialized tasks, like drafting a multi-jurisdictional contract or explaining a new regulation to a non-legal audience, still benefit from structured prompts.

Bottom line: Prompt engineering isn’t going away; it’s evolving. While casual prompts might suffice for basic tasks, complex legal work demands the nuance and reliability that come from well-crafted instructions.

Call Out: Myth vs. Reality

- Myth: “Prompt engineering is obsolete because AI can figure out everything on its own.”

- Reality: Large language models rely heavily on how you present your question or directive. In law, a single misdirection can lead to omitted details, overlooked cases, or misunderstood contexts.

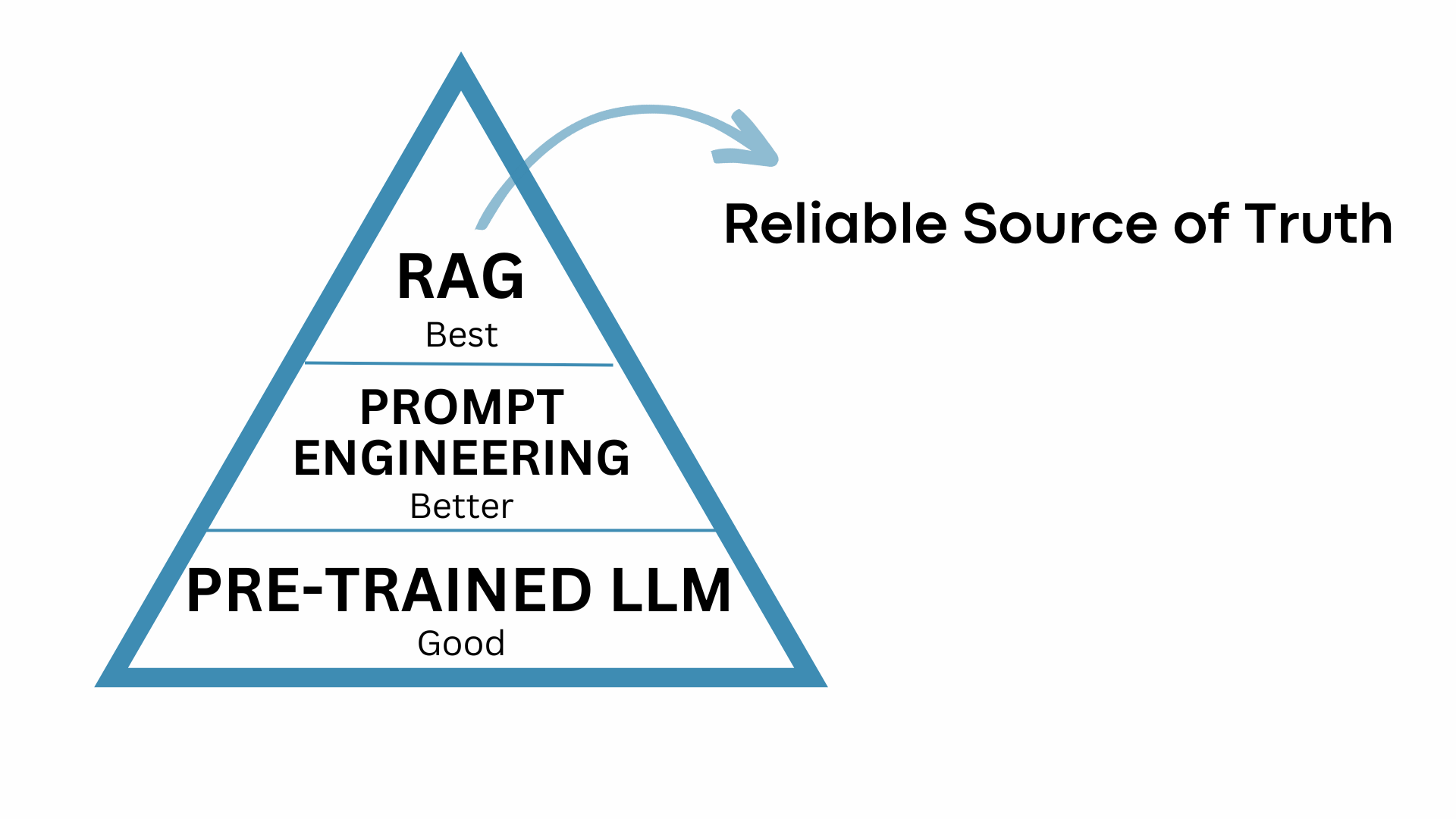

Retrieval-Augmented Generation (RAG)

So far, we’ve focused on improving prompts to get better results from an AI’s internal “learned” knowledge. But many legal tasks require the AI to reference specific data—like the text of a contract, a set of case law, or newly passed legislation that did not exist during the AI’s training. Retrieval-Augmented Generation (RAG) solves this problem by allowing the AI to fetch external, up-to-date information before producing an answer, kind of like taking an open-book test.

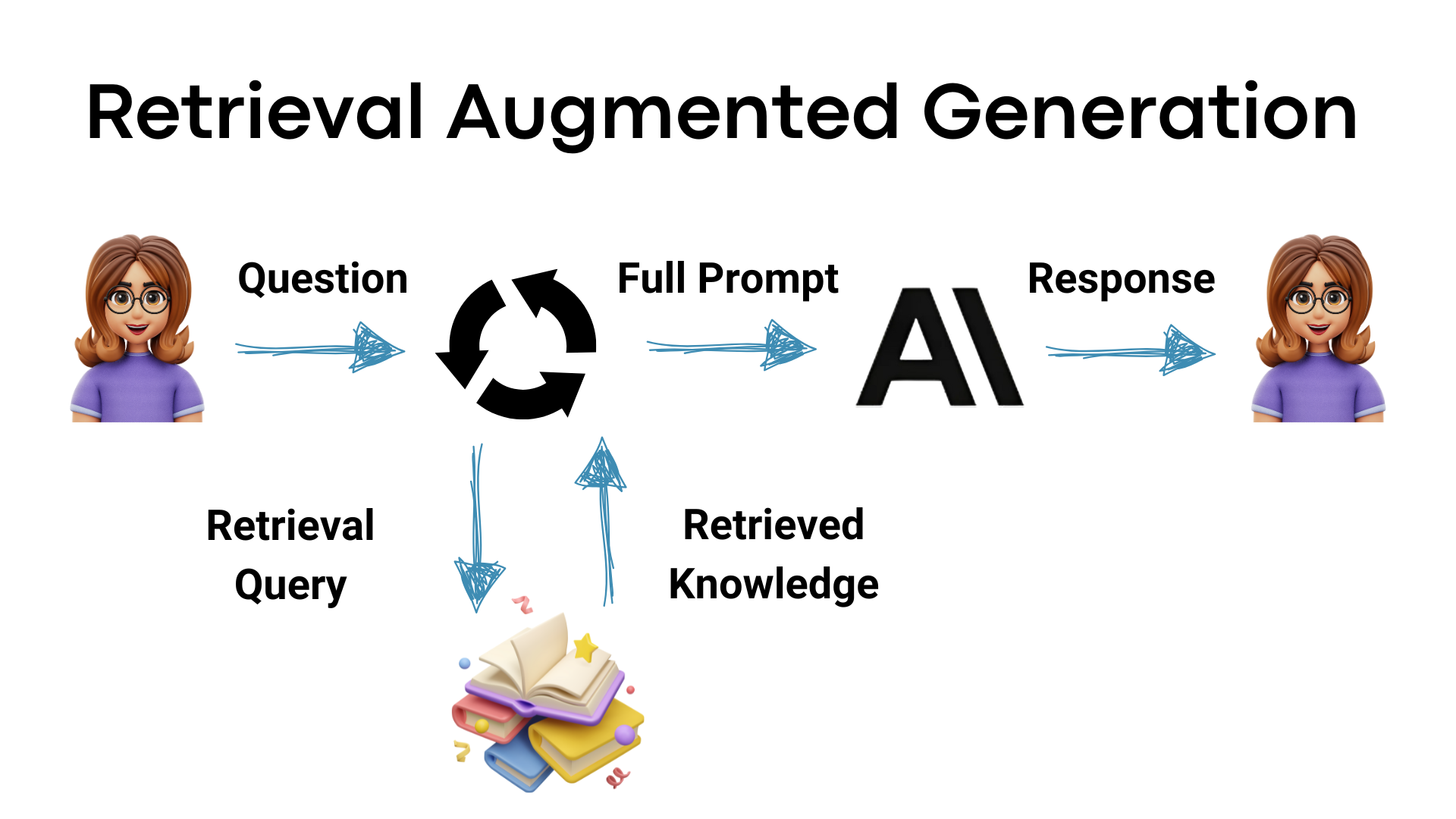

How RAG Works

- Retrieval: The system takes your query and searches a designated repository or database (e.g., a vector database of client documents, a law library, or recent legislative texts).

- Augmentation: Relevant snippets from the repository are fed into the AI model along with your query.

- Generation: The model produces a response that incorporates the retrieved text, thus “grounding” its answer in real, external data.

With RAG, the AI isn’t forced to rely solely on memory or guesswork; it can point to the passages it was given. This significantly reduces hallucinations and increases factual accuracy, although it does not remove the need to verify the output.
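The three steps (retrieval, augmentation, generation) can be sketched in a few lines of Python. To keep the example self-contained, retrieval here uses simple word overlap instead of a vector database, the documents are invented placeholder text, and the "model" is a stub that returns a fixed string. All function names are illustrative assumptions.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Retrieval: rank documents by how many query words they share."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(text)), doc_id)
              for doc_id, text in documents.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first
    return [doc_id for _, doc_id in scored[:top_k]]


def augment(query, documents, doc_ids):
    """Augmentation: put the retrieved snippets into the prompt with the query."""
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite them by their bracketed IDs.\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


def generate(prompt, model):
    """Generation: hand the augmented prompt to the model."""
    return model(prompt)


# Invented placeholder documents (not real legal text).
documents = {
    "lease-clause-7": "The tenant must give thirty days written notice before ending the lease.",
    "lease-clause-12": "Pets are not allowed on the premises without written consent.",
    "memo-2021": "Quarterly budget figures for the marketing department.",
}
query = "How much notice must the tenant give before ending the lease?"

doc_ids = retrieve(query, documents)
prompt = augment(query, documents, doc_ids)
answer = generate(prompt, model=lambda p: "(the model's answer would appear here)")
print(prompt)
```

Note that the instruction to answer "using only the sources below" is itself a piece of prompt engineering: retrieval supplies the text, but the prompt tells the model how to use it.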

Naive RAG vs. Enhanced RAG

Naive RAG typically follows a straightforward, one-step process: the user’s query triggers a single retrieval pass from the database or document repository, and whatever the system finds is passed directly to the AI model. While this is simpler to implement, it can be limiting for more complex or nuanced questions—especially those that involve multiple issues or require analysis of different types of documents. Because naive RAG doesn’t break the query into sub-queries or refine its search results further, there’s a risk of missing key details, returning irrelevant documents, or failing to fully address multi-part problems.

Enhanced RAG can take many forms, but one example is a system that can break down the user’s larger question into smaller sub-queries, each targeting a specific piece of information or a distinct part of the user’s overall request. For instance, if the user wants a comparative analysis of two different statutes, the system might run separate searches for each statute, gather the relevant documents, and then synthesize the findings. This approach ensures that each part of a complex question is thoroughly addressed rather than relying on a single search that might overlook critical details.

In addition, enhanced RAG systems may use a technique called reranking, where the system retrieves multiple candidate documents but then scores these documents according to how likely they are to be relevant. Only the top-scoring (i.e., most pertinent) documents are ultimately passed along to the AI model. By reranking the results, the system helps filter out irrelevant or lower-quality texts, improving the overall accuracy and clarity of the AI’s final response.
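To make the two ideas concrete, the sketch below runs one search per sub-query and then reranks each candidate list with a stricter score. It rests on several assumptions: the sub-queries are written by hand (a real system might ask an AI model to produce them), the first pass counts shared words, the reranker uses Jaccard similarity as a stand-in for a dedicated reranking model, and the documents are invented placeholders.

```python
import re


def words(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def first_pass(query, documents, limit=3):
    """Broad retrieval: rank by the number of words shared with the query."""
    q = words(query)
    scored = [(len(q & words(text)), doc_id) for doc_id, text in documents.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:limit]]


def rerank(query, documents, candidates, keep=1):
    """Stricter scoring: Jaccard similarity (shared words / all distinct words)."""
    q = words(query)
    scored = []
    for doc_id in candidates:
        d = words(documents[doc_id])
        scored.append((len(q & d) / len(q | d), doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:keep]]


def enhanced_retrieve(sub_queries, documents, keep=1):
    """Run one retrieval pass per sub-query, each followed by reranking."""
    results = {}
    for sub_query in sub_queries:
        candidates = first_pass(sub_query, documents)
        results[sub_query] = rerank(sub_query, documents, candidates, keep)
    return results


documents = {
    "state-a-privacy": "State A privacy statute covering consumer data rights",
    "state-b-privacy": "State B privacy statute covering biometric data",
    "state-a-tax": "State A tax code for small businesses",
}
# A comparative question, split by hand into one sub-query per statute.
sub_queries = ["State A privacy statute", "State B privacy statute"]
print(enhanced_retrieve(sub_queries, documents))
```

In this toy data, a single search for "privacy statute" would surface both privacy documents without distinguishing which state each belongs to. Splitting the question ensures each statute gets its own targeted search.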

Practice Pointer:

When dealing with complex legal queries—like “Compare the privacy regulations of State A and State B, and cite any major changes in the last year”—an enhanced RAG system might be best. It can retrieve the relevant statutes and also locate the legislative history indicating recent amendments.

Call Out: Key Term – "Vector Database"

Instead of searching documents by plain-text keywords alone, modern RAG often uses vector embeddings to find semantically similar content. This means the AI can locate relevant passages even if the query uses synonyms or related phrases not found verbatim in the document. The system “embeds” both the query and the documents into numerical vectors, then looks for the closest matches.
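The "closest match" idea can be shown with a toy example. Real embeddings are produced by an embedding model and typically have hundreds or thousands of dimensions. The three-dimensional vectors below are hand-made purely for illustration, and the document names are invented. Closeness is measured with cosine similarity, one common choice.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def nearest(query_vector, index, top_k=1):
    """Return the IDs of the `top_k` documents most similar to the query."""
    ranked = sorted(
        index,
        key=lambda doc_id: cosine_similarity(query_vector, index[doc_id]),
        reverse=True,
    )
    return ranked[:top_k]


# Hand-made "embeddings" (illustrative values only).
# The dimensions loosely stand for: [housing, employment, taxation].
index = {
    "security-deposit-rules": [0.9, 0.1, 0.0],
    "overtime-pay-rules": [0.1, 0.9, 0.1],
    "property-tax-appeals": [0.4, 0.0, 0.9],
}
# Imagined embedding of a query such as "landlord kept my damage deposit".
query_vector = [0.8, 0.2, 0.1]
print(nearest(query_vector, index))  # ['security-deposit-rules']
```

The query never uses the words "security deposit," yet its vector points in nearly the same direction as the housing document. That is the sense in which vector search finds semantically similar content.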

Why RAG Boosts Accuracy and Reliability

Reduced Hallucinations

The most obvious benefit of RAG is that the AI can directly quote or paraphrase the retrieved source, lowering the odds of fabricating case names or statutory language. For instance, if the AI’s final output references a specific code section, it likely came from the actual text retrieved, rather than an AI “guess.”

Up-to-Date Information

Static AI models often have a “knowledge cutoff date” (the year when their training data ended). If you’re researching a brand-new case or statutory amendment, a standard AI model might not have it. RAG taps real-time or frequently updated databases, ensuring the AI’s final answer reflects the latest legal developments.

Domain-Specific Expertise

Want your AI to become an “expert” in maritime law, family law, or niche local regulations? Provide a curated repository of relevant sources. RAG then uses those domain-specific texts to bolster the AI’s responses, without requiring a specialized, custom-trained model.

Transparent Citations and Verification

Many RAG-based systems offer references to the exact documents or passages used in generating the answer. Lawyers can then verify each statement. This is vital for professional or academic settings where traceability matters.
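Part of that verification can be automated. The sketch below checks whether each passage the AI placed in quotation marks actually appears, word for word, in the retrieved sources. It is a deliberately narrow check resting on stated assumptions: it handles only straight double quotes, the function name `unsupported_quotes` is hypothetical, and the sample texts are invented. It cannot tell whether a paraphrase is accurate, so it supplements a lawyer's review and does not replace it.

```python
import re


def normalize(text):
    """Lowercase and collapse whitespace so minor spacing differences are ignored."""
    return " ".join(text.lower().split())


def unsupported_quotes(answer, sources):
    """Return quoted passages in `answer` that appear in none of the sources.

    `sources` maps a source ID to its text. Only passages wrapped in
    straight double quotes are checked.
    """
    quotes = re.findall(r'"([^"]+)"', answer)
    source_texts = [normalize(text) for text in sources.values()]
    return [
        quote for quote in quotes
        if not any(normalize(quote) in text for text in source_texts)
    ]


# Invented placeholder source and answer.
sources = {
    "doc-1": "The deposit must be returned within the period stated in the lease.",
}
answer = (
    'The lease says the deposit "must be returned within the period stated '
    'in the lease" and that the landlord "may keep the deposit indefinitely".'
)
print(unsupported_quotes(answer, sources))  # ['may keep the deposit indefinitely']
```

Any passage this check flags deserves immediate attention, since a quotation that matches no retrieved source is a strong sign of a hallucination.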

Real-World Applications of Prompt Engineering & RAG in Law

Legal Research Platforms

Several legal technology platforms (e.g., Casetext, Lexis+ AI) now integrate RAG. When you type a query, these platforms retrieve relevant cases, statutes, or secondary sources and feed them to the LLM. The answer then weaves in the exact language from those references. This approach helps ensure that you don’t get non-existent or outdated cites—though you should still confirm their validity.

Example Scenario:

A user queries, “What is the controlling standard for summary judgment in federal court, and has it changed since 2022?”

The system retrieves FRCP 56 and relevant appellate decisions from 2022–2023.

The AI references those materials explicitly, quoting the updated clarifications.

The user sees footnotes or in-text references to the actual opinions, facilitating a quick check.

Contract Drafting and Review

Law firms use prompt engineering frameworks, often behind a user-friendly interface, to produce standard contract templates (NDAs, employment agreements, etc.). With RAG, the AI can also reference a bank of sample clauses or newly passed laws that must be integrated.

Practice Pointer:

When drafting contracts that must align with particular state statutes, use RAG to ensure the exact statutory language is included or cited appropriately. For instance, “AI, retrieve the relevant portion of the California Civil Code governing security deposits, then incorporate it into this lease.”

Note: This only works if your AI platform has access to the text of the California Civil Code as its source of ground truth.

Document Summaries in Litigation

Large-scale litigation often involves mountains of documents. AI-based e-discovery tools can embed these documents in a vector database. Through RAG, an attorney can ask, “Which emails discuss ‘Project Tiger’ and potential budget overruns?” The system retrieves those emails and uses the LLM to summarize them, pinpointing crucial evidence.

This step is typically repeated iteratively:

- Find the relevant documents via retrieval.

- Summarize them with the AI.

- Ask clarifying follow-up questions or refine your queries.

- Potentially run the process again with narrower or broader criteria.

Brief Analysis

Some advanced tools allow you to upload an opponent’s brief and ask the AI for a critique or identification of weaknesses. RAG helps the AI reference controlling law. The prompt might read:

Analyze the attached brief. Identify any misquotes or contradictory points, retrieve relevant cases from the database that challenge the brief’s main arguments, and list them in bullet form.

In response, the AI can highlight each contested point, citing the actual case language from your repository.

Example Scenario: RAG in Action – Tenant Law

A local housing authority wants a Q&A chatbot to handle common tenant-landlord disputes. They embed relevant state statutes and forms in a vector database. The chatbot is then configured with RAG:

Tenant asks, “What do I do if my landlord never returns my security deposit in Texas?”

The system retrieves the relevant part of the Texas Property Code.

The AI references that code in plain English, providing a step-by-step approach and even offering a link to the official tenant complaint form (also stored in the repository).

The synergy of well-structured prompts (ensuring the AI stays on topic) and RAG (pulling the legal text) creates a far more reliable self-help tool than a generic, free-form chatbot.

Future Directions: Beyond RAG?

While RAG is currently the cutting-edge approach to making AI answers more reliable, some developers are already combining it with knowledge graph techniques (sometimes called “Knowledge-Augmented Generation” or KAG) to further reduce hallucinations. Instead of (or in addition to) retrieving raw text, the AI also queries structured data—like a legal knowledge graph that outlines relationships between statutes, regulations, and cases. This structured approach can help the AI “understand” that Case B overruled Case A, or that an amendment changed a certain statute in 2023.
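A knowledge graph can be pictured as a list of subject, relation, and object triples. The toy sketch below shows how such a structure lets a system answer a question like "has this case been overruled?" by lookup rather than by guesswork. The case and statute names are placeholders, the relation labels are invented, and real knowledge-graph systems are far more elaborate.

```python
# Each edge is (subject, relation, object). All names are placeholders.
edges = [
    ("Case B", "overrules", "Case A"),
    ("Case C", "cites", "Case A"),
    ("Amendment 2023", "amends", "Statute X"),
]


def related(edges, relation, target):
    """Return every subject linked to `target` by `relation`."""
    return [subj for subj, rel, obj in edges if rel == relation and obj == target]


def is_still_good_law(edges, case_name):
    """Treat a case as good law only if nothing in the graph overrules it."""
    return not related(edges, "overrules", case_name)


print(is_still_good_law(edges, "Case A"))        # False
print(related(edges, "overrules", "Case A"))     # ['Case B']
print(related(edges, "amends", "Statute X"))     # ['Amendment 2023']
```

The answer is only as complete as the graph: a case missing from `edges` would be reported as good law simply because no overruling edge has been recorded.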

Nevertheless, for most practical legal applications today, a robust RAG pipeline—plus sound prompt engineering—goes a long way toward bridging the gap between a model’s general knowledge and the specific, up-to-the-minute facts lawyers need.

Putting It All Together: Examples and Best Practices

Below is a consolidated workflow for combining Prompt Engineering and Retrieval-Augmented Generation when tackling a typical legal research or drafting problem:

- Define Your Goal and Context

- Example: “I need a memo on changes to landlord-tenant law in Illinois specifically about eviction procedures and notice requirements.”

- Craft a Structured Prompt (Using CRAFT, RTF, or RISEN)

- Role (R): “You are a real estate litigator in Illinois…”

- Task (T): “Draft a 1-2 page memo…”

- Format (F): “Present it in bullet-point paragraphs, include case citations.”

- Enable Retrieval

- Make sure the AI is set to use your Illinois landlord-tenant statutes database or relevant case law.

- Check the Output

- Evaluate the results. Did it mention outdated or inapplicable codes? Ask for self-critique or verify citations.

- Refine or Follow Up

- “Please highlight any major changes enacted after January 2023,” or “Provide direct quotes from the statutory amendments.”

- Finalize

- Accept the final draft, but always confirm critical details independently if used in a real legal context.

Practice Pointer:

For important tasks, treat the AI like you would an assistant: review, verify, and correct. Do not assume it’s 100% correct just because it looks well-formatted.

Chapter Recap

In this chapter, we took a close look at prompt engineering and retrieval-augmented generation (RAG), two critical building blocks for using AI responsibly and effectively in legal work. We began by discussing how the way you phrase a question (or “prompt”) greatly impacts the AI’s response. Then, we examined frameworks that help structure prompts, explained how meta-prompting can refine them, and explored how RAG leverages external data to reduce errors and stay current. Here are the key takeaways:

- Prompt Engineering is vital because how you phrase your question directly influences the AI’s output. Naive prompts yield generic or even incorrect answers, whereas informed prompts guide the AI effectively.

- Frameworks like RTF, RISEN, and CRAFT provide simple, repeatable structures for drafting prompts that include role, context, format, and constraints.

- Meta-Prompting allows you to use AI to refine your own prompts. Iterative refinement helps you zero in on the best approach for complicated tasks.

- Retrieval-Augmented Generation (RAG) grounds the AI’s output in actual documents, reducing hallucinations and enabling up-to-date, domain-specific information.

- Naive RAG is a single-pass approach, while enhanced RAG breaks down queries or uses reranking to find the most relevant documents.

- Real-World Use Cases in legal research, contract drafting, document review, and chatbots show how combining robust prompt design with RAG can significantly improve reliability and efficiency.

- Looking Ahead: More advanced methods, including knowledge graphs or “Knowledge-Augmented Generation,” aim to push accuracy and consistency even further, but for now, a well-structured RAG approach plus proper prompt engineering is a powerful baseline.

By blending sound prompt engineering with retrieval-based solutions, lawyers and law students can unlock AI’s potential without sacrificing accuracy or thoroughness.

Final Thoughts

Prompt engineering is about effective communication, the same skill lawyers use every day when writing briefs or explaining issues to clients. The difference is that you’re communicating with an AI model, which relies on precise instructions to stay on track. Meanwhile, RAG ensures the AI doesn’t have to rely purely on “memory,” but can consult the actual text of statutes, opinions, or documents.

Think of an AI model as an enthusiastic law clerk who can read and write at astonishing speed but may sometimes misunderstand what you want unless you clarify. It can become your research assistant, summarizing volumes of documents or drafting early versions of memos and contracts. But it’s only as good as the context and direction you provide.

As you move on in your legal career, these tools will likely be refined and become even more integral. Your ability to guide them with well-considered prompts and ensure they draw on the correct sources will be a key differentiator, enabling you to work faster, more accurately, and with greater confidence.

What’s Next?

In Chapter 7, we’ll zoom out from the mechanics of AI interaction and look at the broader implications for the legal profession. How are law firms rethinking their business models? What are the ethical and practical concerns around billing clients for AI-assisted work? Could AI disrupt certain practice areas entirely, or will it simply reshape them? We’ll explore these questions and more in subsequent chapters, examining how the rise of AI tools influences everything from client relationships to legal tech startups, access to justice initiatives, and regulatory changes.

References

- Phoenix, J., & Taylor, M. (2024). Prompt Engineering for Generative AI. O'Reilly.

- Bourne, K. (2024). Unlocking Data with Generative AI and RAG. Packt.

- Bouchard, L., & Peters, L. (2024). Building LLMs for Production. Towards AI.